Amplyfi - Making AI Insights Accessible and Trustworthy

2021

Client

Amplyfi

Context

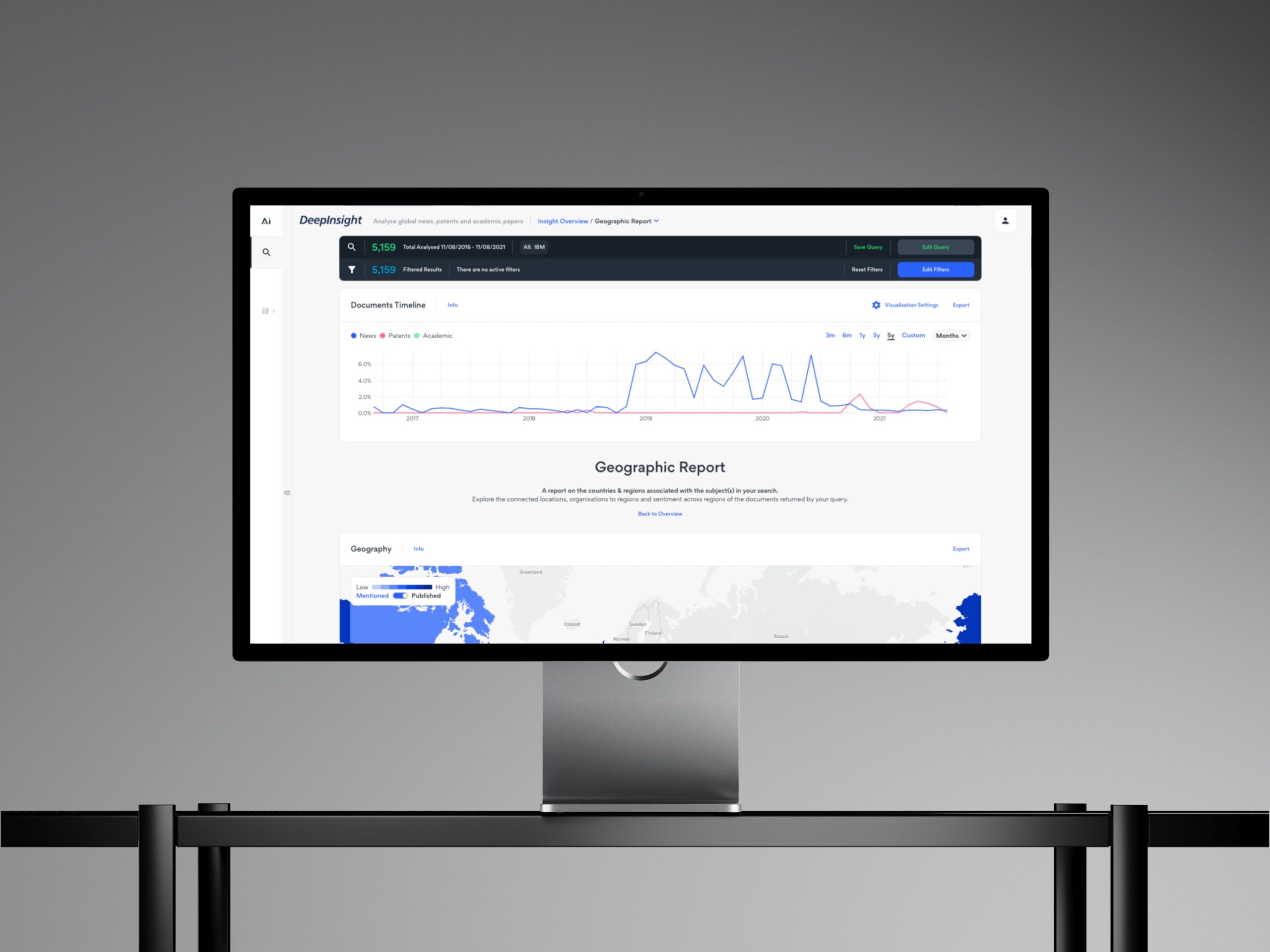

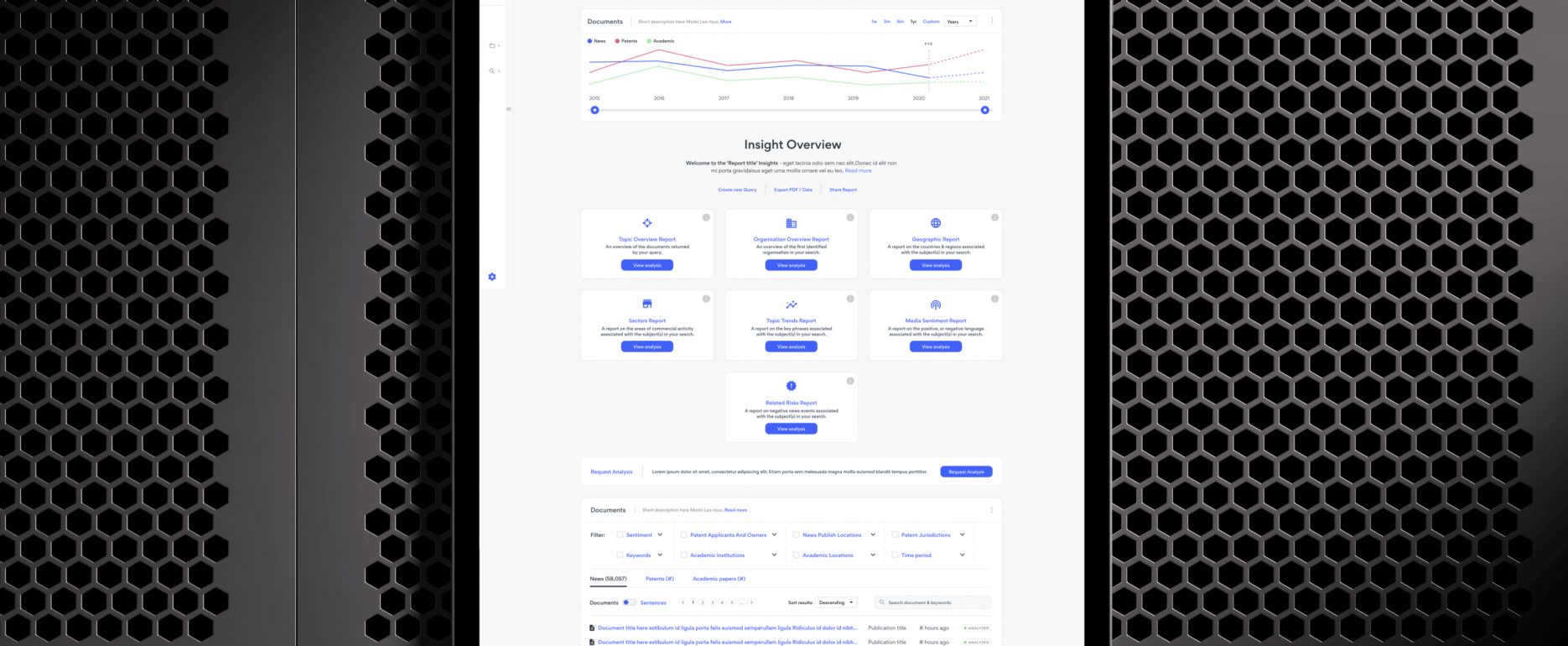

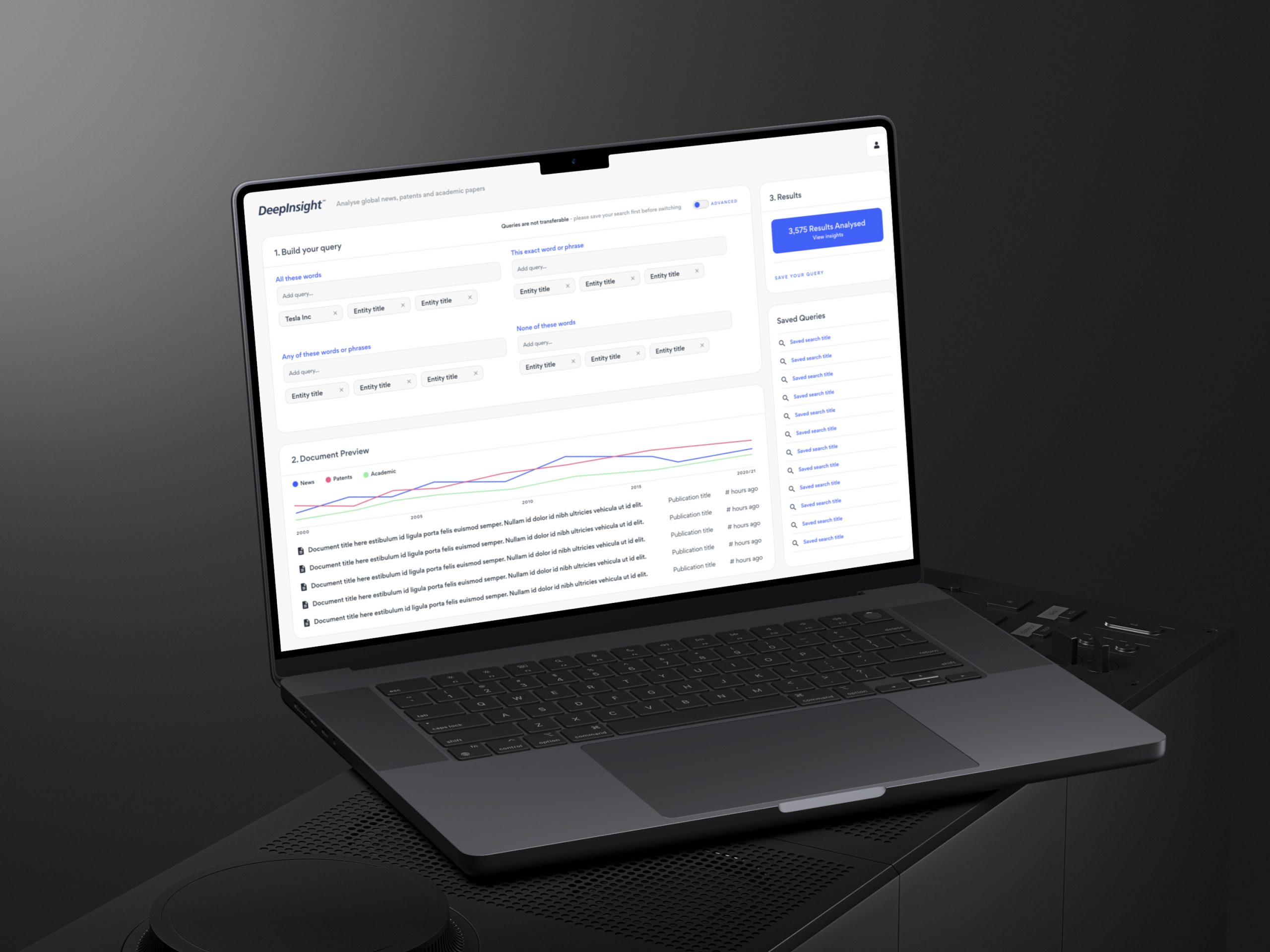

Amplyfi is an AI startup that provides research and intelligence tools for analysts and decision-makers. Its DeepInsight platform is an unstructured-data tool that enables real-time research and analysis across more than 100 million documents from news, patents, and academic papers, supporting horizon scanning, competitor monitoring, and market risk assessment.

The brief was to deliver a working MVP within six months that could handle daily incremental data loads for near real-time research, support private data repositories, and surface insights from proprietary ML and NLP models.

My role was to bridge the technology and user-centred design, taking the product from concept to public beta release.

The challenge

The existing platform created significant barriers for new users:

- Opaque AI processes made users uncertain about how insights were generated

- Expert-focused interface assumed deep knowledge of research methodologies

- Steep learning curve meant onboarding took weeks instead of days

- Complex workflows obscured the path from document upload to actionable insights

- Low feature adoption as users stuck to familiar patterns rather than exploring capabilities

The challenge was to make machine-generated insights both clear and trustworthy for users across different skill levels, from beginners to seasoned analysts.

Research and Insights

I led a structured discovery phase to ground the design in real evidence:

Market and competitive research:

- Conducted comprehensive competitor analysis across AI research platforms

- Identified gaps in how existing tools presented machine-generated insights

- Found that most platforms prioritised power over clarity, creating accessibility barriers

Discovery workshops:

- Facilitated sessions with stakeholders and engineering teams to understand technical capabilities and constraints

- Aligned teams on how to surface proprietary ML/NLP model outputs

- Defined success criteria for the MVP

User research:

- Led design discovery through interviews with analyst teams and subject matter experts

- Identified key friction points in document upload, tagging, and insights generation

- Developed personas from user interviews, representing beginner, intermediate, and expert researchers

- Created experience maps showing current research workflows

Key insights:

- Users didn’t understand why the AI made certain decisions

- Beginners needed more guidance; experts wanted speed and configurability

- Users lacked confidence in AI-generated outputs without context

- The linear workflow didn’t match how people actually conducted research

- Success metrics weren’t visible, making it hard to judge research quality

- Near real-time updates needed clear visual indicators to show data freshness

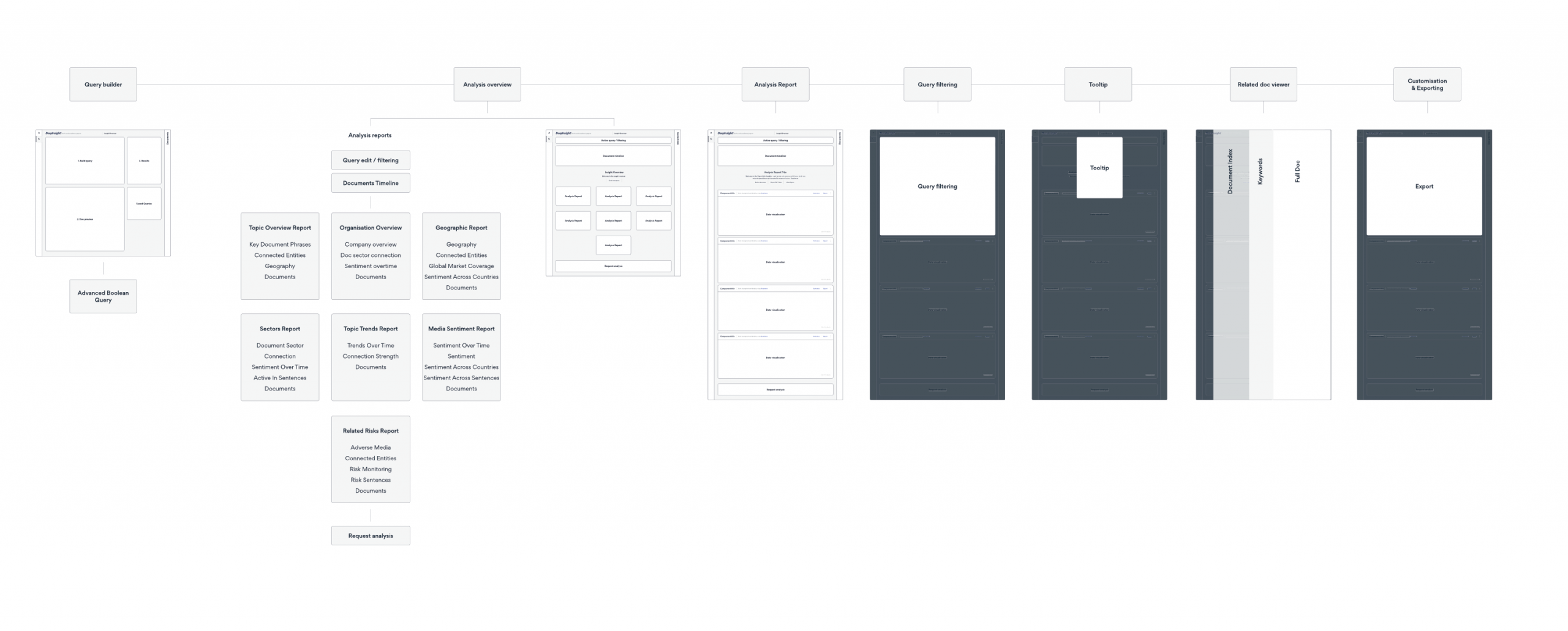

Early-stage user flow mapping.

Process

Working within a six-month timeline, I established a comprehensive design approach:

- Competitor research and analysis to understand the landscape and identify opportunities

- Discovery workshop facilitation with stakeholders and engineering teams to align on vision and technical constraints

- Persona development and experience mapping through user interviews to understand different researcher needs

- Product definition including roadmap development and feature prioritisation

- Concepts and sketches to explore different approaches to surfacing AI insights

- Wireframes and basic prototypes to test core user flows with stakeholders and users

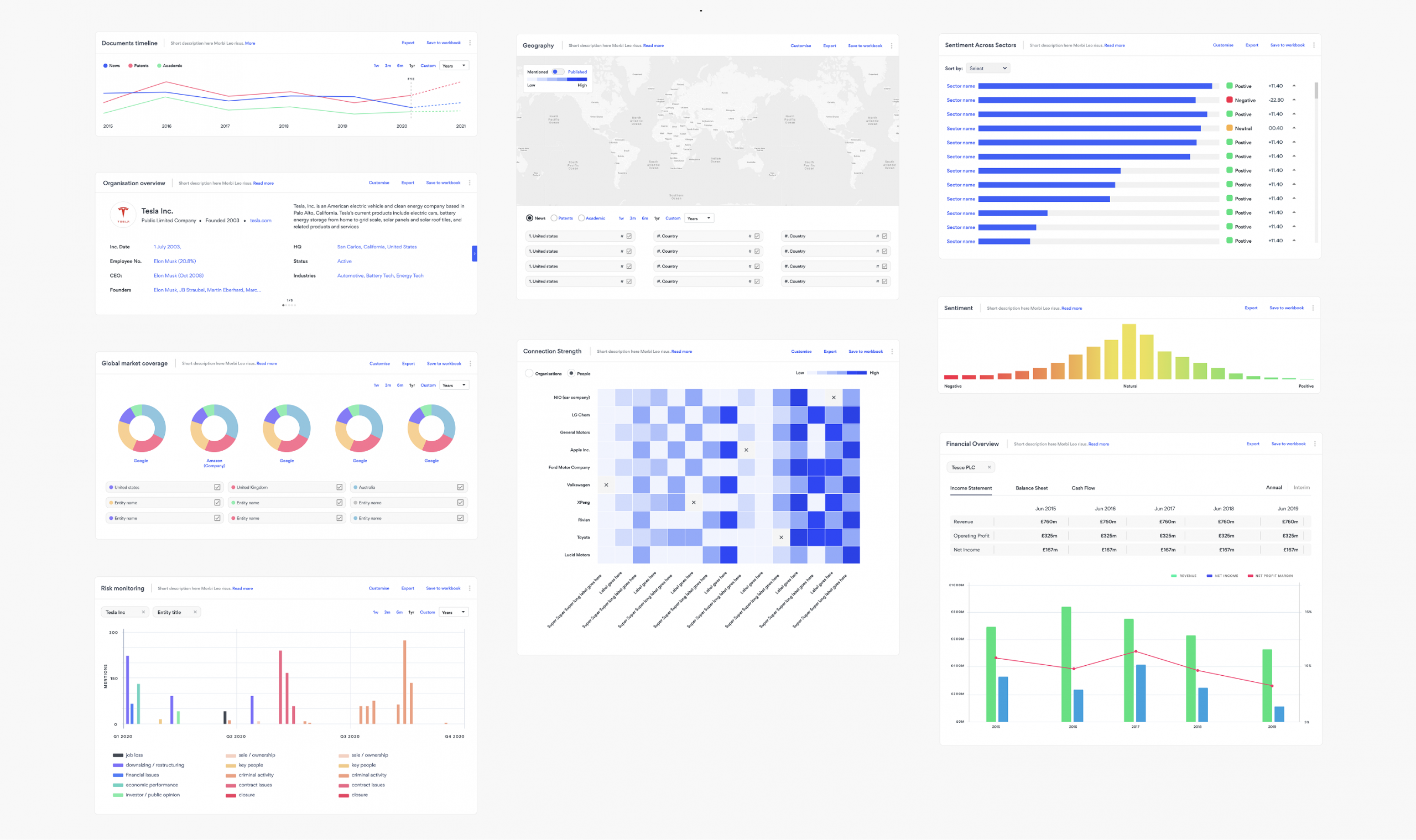

- Design system creation built from scratch to ensure consistency across the platform

- Data visualisation library with 20+ custom components for different insight types

- High-fidelity design and presentation with regular feedback sessions to validate direction

- User testing, analysis and iteration based on real user behaviour and feedback

- Feedback workshops to refine and improve the experience throughout development

I maintained close collaboration with data scientists and engineers throughout to ensure designs were technically feasible and could surface complex ML/NLP outputs in ways users could understand and trust.

Data visualization – component design system.

Solution

I introduced a redesigned interface that balanced guidance with flexibility:

Guided workflows:

- Step-based onboarding experience scaffolded learning for new users

- Contextual explainer cards revealed how AI generated specific insights

- Confidence indicators showed the strength of AI-generated connections

- Progressive disclosure revealed advanced features as users gained competence

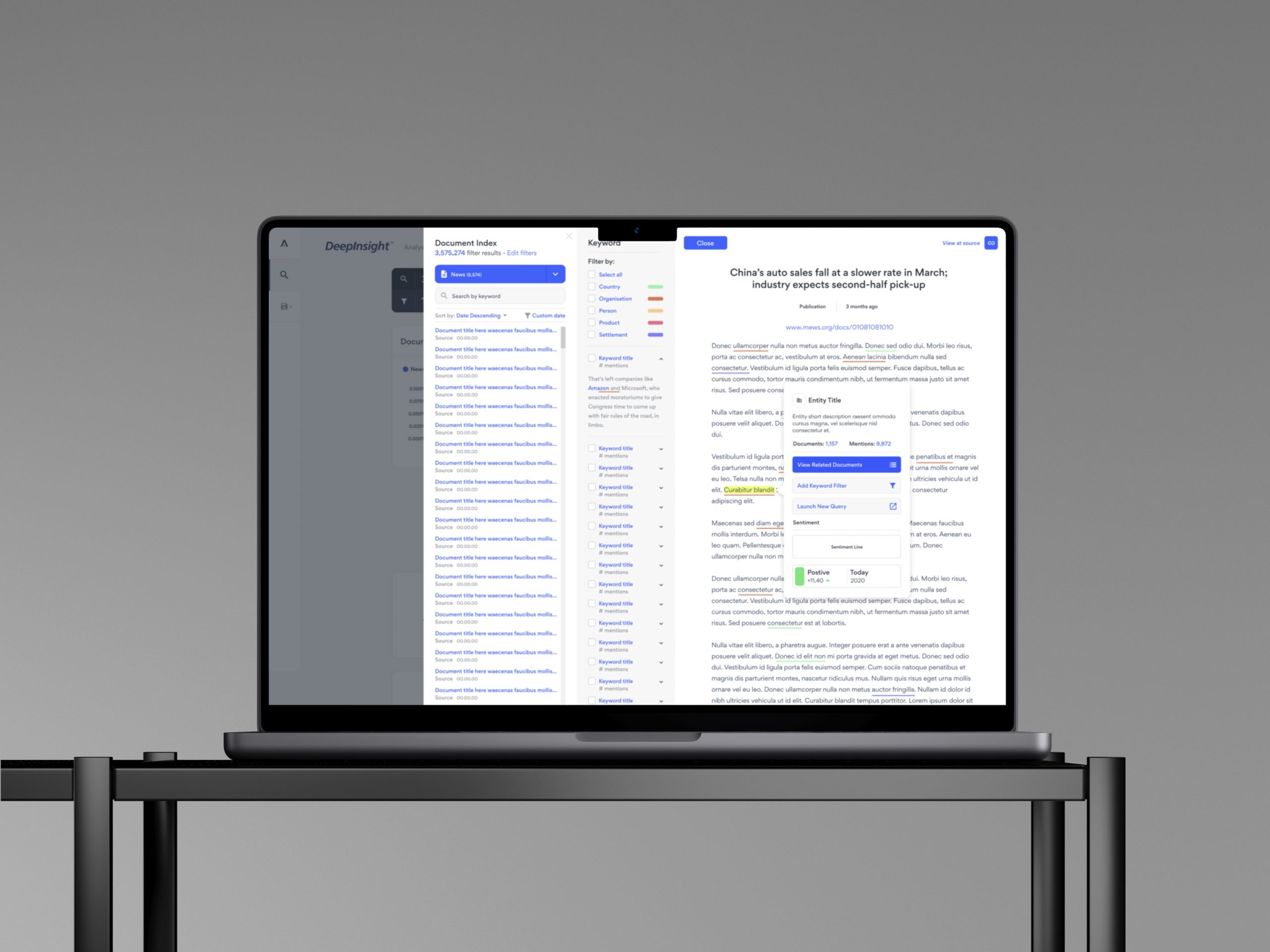

Enhanced transparency:

- Tooltips explained technical concepts in plain language

- Visual indicators showed which documents influenced each insight

- Alternative interpretations were surfaced when confidence was lower

- Users could drill down into source material to verify AI claims

Flexible navigation:

- Persona-based entry points catered to different user goals

- Expert users could bypass guidance and jump straight to advanced features

- Saved workflows enabled teams to share successful research patterns

Key Design Decisions and Challenges

Making AI decisions visible and trustworthy

The core challenge was translating complex NLP processes into understandable visual patterns. I worked closely with data scientists to understand what the algorithms were actually doing, then designed visual explanations that built user confidence without oversimplifying.

Balancing guidance with speed

Expert users didn’t want hand-holding, but beginners needed structure. I solved this by creating a guided default path that could be collapsed or skipped entirely. Power users appreciated the option to learn advanced features at their own pace.

Designing for uncertainty

AI-generated insights vary in certainty. Rather than hiding this, I designed confidence indicators and alternative interpretations that helped users judge when to trust the system and when to dig deeper.

Impact

User improvements:

- 40% reduction in onboarding time through guided workflows

- 60% increase in AI feature usage via improved clarity and visibility

- Positive user feedback specifically noting improved trust in AI outputs

Business outcomes:

- Successful beta release within the six-month MVP timeline

- Expanded addressable market beyond expert analysts

- Reduced customer support burden as users became more self-sufficient